
Infrastructure: Deployment

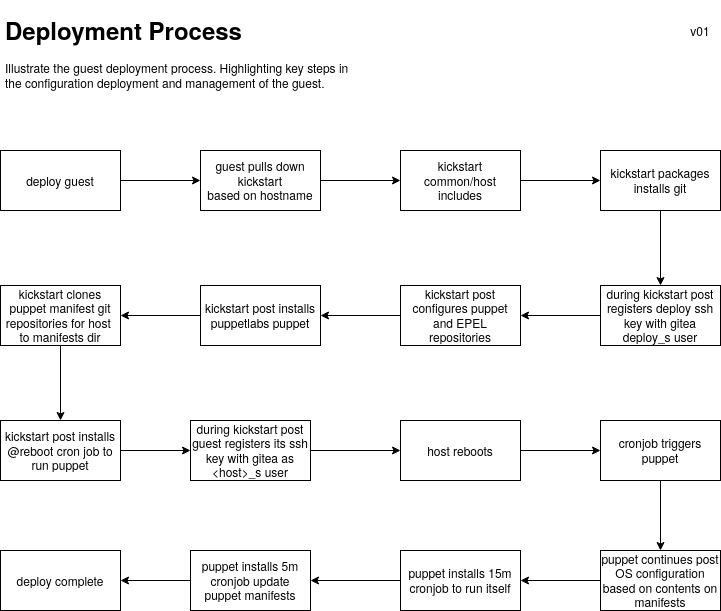

Introduction

We deploy hosts fresh from a netinst image, using kickstart (CentOS) or preseed (Debian) depending on the OS. We don't clone a base image, since that is a waste of space.

A brand new local deploy of tupper (the docker host) takes around 20 minutes from start to services up. The exact time obviously depends on the host.

The expectation is that this is maintained along with the post-deploy configuration, so that we can easily redeploy any box to any platform (e.g. locally, or after it gets owned) and so that we have a desired, known configuration documented.

We will not go into great detail duplicating the information available in the kickstart file. Go read it and come back with questions; those questions can feed this documentation (e.g. justifications for decisions).

Kickstart

Kickstarts are managed through a public git repository, kickstarts, on our Gitea instance. The repository has to be public because of limitations in the kickstart netinst functionality.

Access

There is a default root password, redhat!23, installed in the image; it needs to be changed after the deploy. This password exists only to allow access to failed deploys for troubleshooting. If you leave this password in place post deploy and the server gets hacked, your privileges will be revoked. We will automate the update of the root crypt via a securely cloned repo.

Defining a new guest

- Create a local copy of the git repository: `git clone ssh://git@config.tombstones.org.uk:22122/tombstones/kickstarts.git`

- Create a folder for the new guest

- Create a `kickstart.cfg` file for the guest (see other hosts for examples)

- Deploy the guest
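The folder and file steps above can be sketched as a small helper. This is only an illustration: it assumes one folder per guest in the repository root, named after the guest and containing `kickstart.cfg`, which matches the description here but has not been checked against the repository. The function name `new_guest` and the guest name `newhost` are hypothetical.

```shell
# Sketch only: scaffold a new guest inside a local clone of the kickstarts repo
# by copying the kickstart of an existing host as a starting point.
# Assumed layout (not confirmed): <repo>/<guest>/kickstart.cfg
new_guest() {
    local repo_dir="$1"   # path to the local clone of the kickstarts repository
    local guest="$2"      # short name of the new guest
    local template="$3"   # existing guest whose kickstart.cfg is copied

    if [ ! -f "$repo_dir/$template/kickstart.cfg" ]; then
        echo "no kickstart.cfg found for template host '$template'" >&2
        return 1
    fi
    if [ -e "$repo_dir/$guest" ]; then
        echo "guest '$guest' already exists" >&2
        return 1
    fi

    mkdir -p "$repo_dir/$guest"
    cp "$repo_dir/$template/kickstart.cfg" "$repo_dir/$guest/kickstart.cfg"
    # Print the path of the new file so the caller can open it for editing.
    echo "$repo_dir/$guest/kickstart.cfg"
}
```

For example, `new_guest ./kickstarts newhost tupper` would create `./kickstarts/newhost/kickstart.cfg` from tupper's file. The copy still has to be edited for the new host, committed, and pushed before the guest can be deployed.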

Deploying a guest

Deploy a guest using the buildvm script as an unprivileged user; for now that is vms. It should be as simple as `./buildvm tupper`

The installer will do the following (commit decbfbd84071e46a6fc47c82a51dc96cbdbcb019):

- Checks that the hostname is tupper; to deploy another host, pass `--testing` as the second parameter to the script

- Checks whether we want to clean up any existing copy of the VM and its root drive; generally we will want to

- Checks whether the required network exists (e.g. `halnet`) and attempts to create it if not. If it fails to bring up the network for any reason, the script exits

- Downloads the remote kickstart from git for the host

- Parses the obtained kickstart and extracts the install media and networking information we need to boot the system

- Matches the host by name in a case statement that will set the expected configuration for the host

- Runs virt-install to install the system (read the kickstart files to understand the workings; they are self-documenting per host - sai)

- The kickstart post actions clone the expected puppet manifest git repositories to the host from config.tombstones

- The kickstart installation installs a crontab for root (can't remember why it's a crontab, there was a reason - sai)

- The crontab runs at `@reboot`, applying the minimal base configuration requirements from puppet-common

- Reboots the host at completion of initial deployment
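Two of the steps above, parsing the kickstart and matching the host in a case statement, can be sketched as follows. This is not the code from buildvm. The helper names `ks_option` and `host_config`, the `VM_*` variables, and the sizing values are all hypothetical, and the parser only handles the `--option=value` form of the standard `url` and `network` kickstart directives.

```shell
# Sketch only: print the value of --<option>=<value> from the first
# <directive> line in <file> that carries that option.
# Quoted values and the "--option value" form are not handled.
ks_option() {
    local file="$1" directive="$2" option="$3"
    awk -v d="$directive" -v o="--$option=" '
        $1 == d {
            for (i = 2; i <= NF; i++) {
                if (index($i, o) == 1) {
                    print substr($i, length(o) + 1)
                    exit
                }
            }
        }
    ' "$file"
}

# Sketch only: set per-host VM settings by name and reject unknown hosts.
# The numbers are placeholders, not the real configuration.
host_config() {
    case "$1" in
        tupper)
            VM_RAM_MB=4096
            VM_VCPUS=2
            VM_DISK_GB=40
            ;;
        *)
            echo "unknown host: $1" >&2
            return 1
            ;;
    esac
}
```

With these, `ks_option kickstart.cfg url url` would give the install media location and `ks_option kickstart.cfg network ip` the address, which is the kind of information virt-install needs to boot the installer.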

During installation the host will connect to the VM console so you can monitor the installation

Post reboot, the crontab takes over and configures the system. You can expect the installation of the larger hosts to take around 30 minutes.
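For orientation, an `@reboot` entry in root's crontab has the shape printed by the sketch below. The function name and all three paths are hypothetical placeholders supplied by the caller; the real entry is whatever the kickstart post section installs.

```shell
# Sketch only: print an @reboot crontab entry that applies a puppet manifest
# once at boot and captures its output. Nothing here is taken from the
# actual kickstart files.
reboot_cron_entry() {
    local puppet_bin="$1"   # full path to the puppet executable
    local manifest="$2"     # manifest to apply (placeholder)
    local log="$3"          # file that receives stdout and stderr (placeholder)
    printf '@reboot %s apply %s > %s 2>&1\n' "$puppet_bin" "$manifest" "$log"
}
```

Calling `reboot_cron_entry /usr/bin/puppet /root/site.pp /tmp/puppet.log` prints a single line that could be placed in a crontab. Cron runs `@reboot` entries once when the cron daemon starts, which is why the configuration proceeds unattended after the installer's reboot.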

After the initial configuration, give the host one final reboot and test that all services come back:

- log in as root on the console

- check that `/tmp/puppet-apply` has completed (we should probably send an email)

- reboot

- test services

Git integration

There are two types of git integration: the base deployment and the host-specific configuration.

During a kickstarted deploy, every host registers itself with the read-only deploy_s account using an access token for that account. The token permits the host to register itself and clone the puppet_common repository.

Each host also has its own git account, <host shortname>_s. For now, this account needs to be created manually before deployment and added to the servers team. You will need to log in as that user and create an access token for the server to use in its post-deploy step.

The host registers its SSH key with git and then uses its standard account to clone the repositories that hold its settings.
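One way a host could register its key is through Gitea's REST API, which accepts a `POST` to `/api/v1/user/keys` authenticated with an access token. The sketch below assumes that approach; whether the kickstart post scripts actually use the API is not stated here. The function names and arguments are hypothetical.

```shell
# Sketch only: build the JSON body for Gitea's "create public key" call.
# Assumes the title and key contain no double quotes or backslashes,
# which holds for ordinary OpenSSH public keys.
gitea_key_payload() {
    local title="$1" pubkey="$2"
    printf '{"title":"%s","key":"%s"}' "$title" "$pubkey"
}

# Sketch only: register a public key for the account that owns the token.
register_ssh_key() {
    local base_url="$1"      # e.g. the https URL of the Gitea instance
    local token="$2"         # access token created for the host account
    local title="$3"         # label shown in the account's key list
    local pubkey_file="$4"   # path to the host's public key file

    curl --fail --silent --show-error \
        -X POST "$base_url/api/v1/user/keys" \
        -H "Authorization: token $token" \
        -H "Content-Type: application/json" \
        -d "$(gitea_key_payload "$title" "$(cat "$pubkey_file")")"
}
```

Once the key is registered, the host can clone over SSH with its own account, in the same way as the kickstarts repository is cloned in "Defining a new guest".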